When selecting an AI Chatbot Development Company, most buyers evaluate vendors using signals that would fail any serious internal review: confident demos, polished decks, familiar client logos. None of these explain how intelligence is governed, how failure is diagnosed, or how cost and accuracy behave under real-world pressure.

A chatbot is a delegated decision system, not a feature. It speaks in your voice, touches live data, and operates continuously without supervision. That demands a different evaluation posture. You do not listen for conviction. You examine controls, accountability, and operational discipline. Who owns conversational breakdowns? How is intelligence quality measured? What happens when automation degrades over time in large-scale enterprise chatbot development environments?

This checklist applies an auditor’s lens to AI chatbot development companies delivering structured AI chatbot development services. It inspects the people assigned to your account, the technical foundations behind their claims, and the delivery system that determines whether outcomes are repeatable.

Checklist Section 1: Skills Audit

Look past the portfolio. The single greatest predictor of your project’s success is the team assigned to build it, so audit the people before you discuss technology. This checklist examines the six essential roles a true partner must have on the bench, especially if you plan to hire chatbot developers for long-term custom chatbot development services.

Conversational Designer (not a UX designer rebranded)

- Covers: Intent mapping, dialogue flow design, persona architecture, and fallback logic. They script conversations, turn by turn.

- What to ask: “Can you show me a conversation flow document from a past enterprise client?”

- Red flag: The role is handled by a content writer or product manager. This is a dedicated skill.

NLP/NLU Engineer with Production Experience

- Covers: Intent classification, entity extraction, and context window management. They specialize in reducing misclassified and misunderstood user requests.

- What to ask: “How do you handle low-confidence user inputs? What is your escalation design?”

- Red flag: They only use out-of-the-box NLP with no ability to customize models for your domain.
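The escalation question above can be made concrete in code. The sketch below shows one hypothetical confidence-threshold policy. It is not any particular vendor's method. It assumes the NLU layer returns a mapping of intent names to confidence scores. The three thresholds (`ANSWER_THRESHOLD`, `CLARIFY_THRESHOLD`, `AMBIGUITY_MARGIN`) are illustrative values that a real team would tune on held-out conversation data.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Illustrative thresholds (assumptions); tune them on real, held-out conversation data.
ANSWER_THRESHOLD = 0.80   # confident enough to answer directly
CLARIFY_THRESHOLD = 0.50  # plausible enough to ask a clarifying question
AMBIGUITY_MARGIN = 0.10   # top intent must beat the runner-up by this much


@dataclass
class Decision:
    action: str            # "answer", "clarify", or "escalate"
    intent: Optional[str]  # intent to act on, if any
    reason: str            # logged so QA can audit why this path was chosen


def route_low_confidence(scores: Dict[str, float]) -> Decision:
    """Choose answer / clarify / escalate from intent -> confidence scores."""
    if not scores:
        return Decision("escalate", None, "no intent candidates")
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    top_intent, top_score = ranked[0]
    runner_up = ranked[1][1] if len(ranked) > 1 else 0.0
    if top_score >= ANSWER_THRESHOLD and top_score - runner_up >= AMBIGUITY_MARGIN:
        return Decision("answer", top_intent, "confident and unambiguous")
    if top_score >= CLARIFY_THRESHOLD:
        # Two close candidates or a middling score: ask rather than guess.
        return Decision("clarify", top_intent, "plausible but uncertain")
    return Decision("escalate", None, "below clarification threshold")
```

Consider `route_low_confidence({"billing": 0.85, "refund": 0.80})`. It returns a `clarify` decision, even though the top score clears the answer threshold, because the two candidates are too close. A vendor with a mature escalation design should be able to explain where its own thresholds came from.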

LLM Integration Engineer

- Covers: Prompt engineering, RAG pipeline architecture, and token cost optimization. This is crucial for modern, knowledgeable chatbots.

- What to ask: “Walk me through your retrieval strategy for a domain-specific knowledge base.”

- Red flag: Their experience is limited to basic API calls without deeper retrieval or fine-tuning work.

Enterprise Integration Architect

- Covers: Deep experience with CRM, ERP, and enterprise system APIs. They ensure secure, real-time data flow.

- What to ask: “What was your most complex multi-system integration, and what data latency did you achieve?”

- Red flag: Integrations are built from scratch each time with no reusable connector framework.

QA Engineer Specialized in Conversation Testing

- Covers: Testing intent accuracy, edge case responses, and multi-turn context retention.

- What to ask: “What conversation testing framework do you use? What’s your target accuracy benchmark?”

- Red flag: General software QA handles conversation testing. The approaches are fundamentally different.

MLOps / AI Ops Engineer

- Covers: Post-deployment model monitoring, drift detection, retraining cycles, and performance dashboards.

- What to ask: “What does your model performance dashboard track? How do you define a regression?”

- Red flag: This role isn’t defined. Chatbots degrade without active operations.
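One way to make “How do you define a regression?” answerable is to write the definition down as code. The sketch assumes a regression means an intent whose mean daily accuracy in a recent window has fallen more than a fixed tolerance below its baseline window. The 3-point tolerance and the input shape (intent name mapped to a list of daily accuracy scores) are assumptions for illustration.

```python
from statistics import mean
from typing import Dict, List


def find_regressions(
    baseline: Dict[str, List[float]],
    recent: Dict[str, List[float]],
    tolerance: float = 0.03,  # assumed: a drop of more than 3 accuracy points counts
) -> Dict[str, float]:
    """Return intents whose mean accuracy dropped by more than `tolerance`, with the drop size."""
    regressions = {}
    for intent, base_scores in baseline.items():
        recent_scores = recent.get(intent)
        if not base_scores or not recent_scores:
            continue  # no comparable data; a real dashboard should also alert on missing telemetry
        drop = mean(base_scores) - mean(recent_scores)
        if drop > tolerance:
            regressions[intent] = round(drop, 4)
    return regressions


baseline = {"billing": [0.94, 0.92, 0.93], "shipping": [0.90, 0.91]}
recent = {"billing": [0.86, 0.88], "shipping": [0.90, 0.89]}
print(find_regressions(baseline, recent))  # {'billing': 0.06}
```

Shipping dips by one point and stays under the tolerance. Billing drops six points and is flagged. Agreeing on this definition up front prevents arguments later about whether the chatbot has "degraded."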

Checklist Summary Box

A credible AI Chatbot Development Company fields all six roles as dedicated team members. If three or more are absent or vaguely assigned, it is a serious risk. Your project’s foundation is the team building it.

When selecting an experienced AI chatbot development company, businesses should assess technical expertise, LLM capabilities, and real-world deployment experience.

Checklist Section 2: Stack Audit

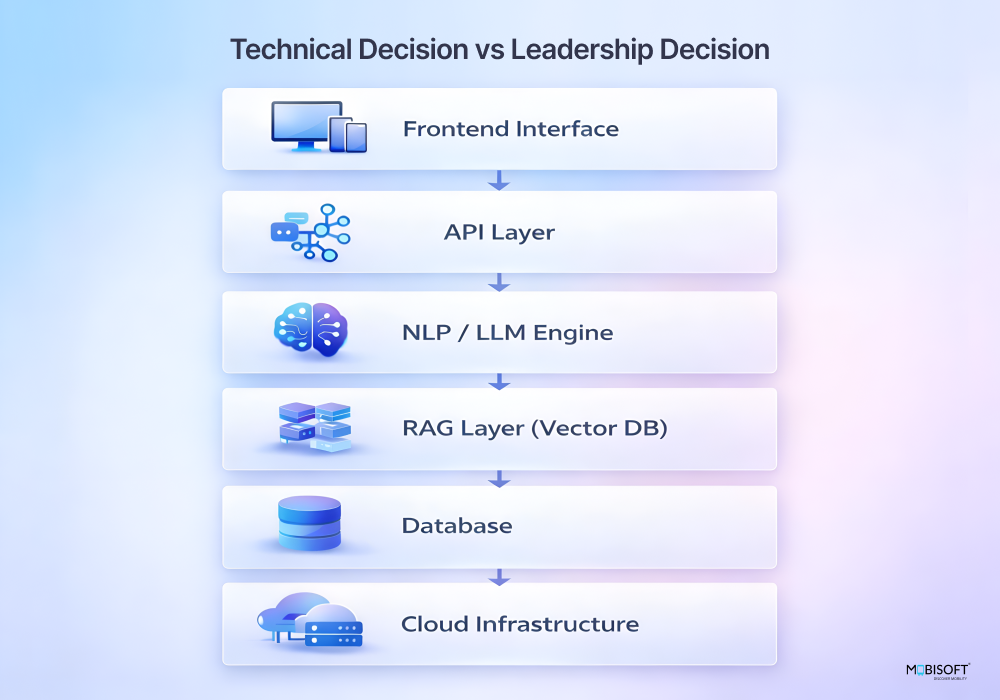

An AI Chatbot Development Company’s technology stack is a direct reflection of its operational maturity. The right tools are not simply the latest ones. They represent a series of deliberate, defensible decisions meant to ensure your chatbot survives real-world use. Let’s audit these six non-negotiable layers.

LLM Strategy: Multi-Model or Single-Vendor?

- Covers: Routing each query to the model best suited to it. For example, GPT-4 might handle complex reasoning while a smaller model such as Llama handles high-volume, simpler tasks. This is cost and performance optimization in action.

- What to ask: “How do you determine which LLM handles a specific type of user question?”

- Red flag: Exclusive reliance on one LLM vendor. It suggests a lack of strategic depth and is likely to inflate your costs.
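The routing question can be illustrated with a deliberately simple rule-based router. Everything here is hypothetical:

- The tier names stand in for models such as GPT-4 or Llama.
- The per-token prices are placeholders.
- The keyword cues and the 60-word cutoff are crude heuristics.

Production routers often use a trained classifier instead, but the decision structure is the same.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str                 # hypothetical label, not a real model identifier
    usd_per_1k_tokens: float  # placeholder price for cost comparisons


SMALL = ModelTier("small-fast-model", 0.0005)
LARGE = ModelTier("large-reasoning-model", 0.01)

# Hypothetical intents judged simple enough for the cheaper tier.
SIMPLE_INTENTS = {"order_status", "store_hours", "password_reset"}
# Crude substring cues that suggest open-ended reasoning is needed.
REASONING_CUES = ("compare", "why", "explain", "recommend", "what if")
MAX_SIMPLE_WORDS = 60


def choose_model(intent: str, query: str) -> ModelTier:
    """Send known simple intents to the small tier unless the query looks like it needs reasoning."""
    text = query.lower()
    needs_reasoning = (
        any(cue in text for cue in REASONING_CUES)
        or len(text.split()) > MAX_SIMPLE_WORDS
    )
    if intent in SIMPLE_INTENTS and not needs_reasoning:
        return SMALL
    return LARGE  # default to the capable model when in doubt


print(choose_model("order_status", "Where is my order?").name)  # small-fast-model
print(choose_model("order_status", "Why is my order slower than last time?").name)  # large-reasoning-model
```

Defaulting to the large tier protects quality when classification is uncertain. The cost saving comes from how much traffic reliably lands in `SIMPLE_INTENTS`.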

RAG Architecture for Knowledge Grounding

- Covers: Moving beyond static FAQs. A Retrieval-Augmented Generation (RAG) chatbot connects to live knowledge bases through vector database integration. It retrieves content by semantic meaning, not just keywords, so its answers are grounded in your own documents.

- What to ask: “Describe your process from uploading a company document to generating a verified answer from it.”

- Red flag: Any mention of managing knowledge purely through manual FAQ entry. That approach is obsolete for enterprise needs and weakens scalable enterprise chatbot development.
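The “upload a document, get a verified answer” question maps onto a retrieve-then-ground pipeline. The sketch below shows that shape with no external dependencies, under explicit assumptions:

- `embed` is a toy bag-of-words stand-in, so it matches shared words rather than meaning. A real RAG system would call a learned embedding model and a vector database.
- The chunk size, `k`, and the `min_score` cutoff are arbitrary.

The part worth examining is the refusal path. When nothing relevant is retrieved, the pipeline returns nothing instead of letting the model guess.

```python
import math
import re
from collections import Counter
from typing import List, Optional, Tuple

Chunk = Tuple[str, str]  # (citation id, chunk text)


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts (lexical, not semantic)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def chunk(doc_id: str, text: str, size: int = 40) -> List[Chunk]:
    """Split a document into windows of `size` words, each tagged with a citable id."""
    words = text.split()
    return [(f"{doc_id}#{i // size}", " ".join(words[i:i + size])) for i in range(0, len(words), size)]


def retrieve(query: str, chunks: List[Chunk], k: int = 2, min_score: float = 0.1) -> List[Chunk]:
    """Return up to `k` chunks ranked by similarity, dropping any below `min_score`."""
    q = embed(query)
    scored = sorted(((cosine(q, embed(text)), cid, text) for cid, text in chunks), reverse=True)
    return [(cid, text) for score, cid, text in scored[:k] if score >= min_score]


def build_prompt(query: str, hits: List[Chunk]) -> Optional[str]:
    if not hits:
        return None  # nothing relevant: escalate or say "I don't know" rather than guess
    context = "\n".join(f"[{cid}] {text}" for cid, text in hits)
    return f"Answer only from the sources below and cite their ids.\n{context}\n\nQuestion: {query}"


docs = chunk("refund-policy", "Refunds are issued within 14 days of a returned item being received.")
docs += chunk("shipping", "Standard shipping takes 3 to 5 business days.")
hits = retrieve("How many days until refunds are issued?", docs, k=1)
print(build_prompt("How many days until refunds are issued?", hits))
```

Tagging each chunk with a citation id lets the answer be checked against its source. That check is what “verified” should mean in a vendor's answer to the question above.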

NLP/NLU Framework Choices

- Covers: Using frameworks that allow domain-specific customization. The ability to fine-tune a model on your industry’s jargon is what creates true understanding.

- What to ask: “Have you performed parameter-efficient fine-tuning (PEFT) for a client? What was the outcome?”

- Red flag: A company that is purely a “platform shop,” for example one that builds only on Dialogflow. It limits your ability to solve unique problems.

Integration Layer Architecture

- Covers: Building with enterprise-grade protocols and security from the start. Think OAuth, webhooks, and pre-built adapters for common systems, not custom code for every connection.

- What to ask: “How is real-time data synced from our CRM into an active conversation?”

- Red flag: Every integration is described as a custom development project. It points to a lack of reusable, tested components.
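The difference between a reusable connector framework and per-project custom code is easiest to see in a base class. In this hypothetical sketch, retries, exponential backoff, and latency measurement are written once in `Connector`. Each system adapter only implements `_fetch`. `InMemoryCRMConnector` is an invented stand-in that simulates transient outages; a real adapter would call the CRM’s API with OAuth credentials.

```python
import time
from abc import ABC, abstractmethod
from typing import Dict, Optional


class Connector(ABC):
    """Shared plumbing that every system adapter inherits instead of reimplementing."""

    def __init__(self, max_retries: int = 2, backoff_s: float = 0.2):
        self.max_retries = max_retries
        self.backoff_s = backoff_s
        self.last_latency_ms: Optional[float] = None  # latency of the last successful call

    @abstractmethod
    def _fetch(self, key: str) -> Dict:
        """System-specific lookup, implemented by each adapter."""

    def get(self, key: str) -> Dict:
        for attempt in range(self.max_retries + 1):
            start = time.perf_counter()
            try:
                result = self._fetch(key)
            except ConnectionError:
                if attempt == self.max_retries:
                    raise  # retries exhausted: surface the failure to the escalation logic
                time.sleep(self.backoff_s * 2 ** attempt)  # exponential backoff
                continue
            self.last_latency_ms = (time.perf_counter() - start) * 1000
            return result
        raise RuntimeError("unreachable")


class InMemoryCRMConnector(Connector):
    """Hypothetical adapter that fails `fail_times` times before succeeding."""

    def __init__(self, records: Dict[str, Dict], fail_times: int = 0, **kwargs):
        super().__init__(**kwargs)
        self.records = records
        self.fail_times = fail_times

    def _fetch(self, key: str) -> Dict:
        if self.fail_times > 0:
            self.fail_times -= 1
            raise ConnectionError("simulated transient CRM outage")
        return self.records[key]


crm = InMemoryCRMConnector({"c-1": {"tier": "gold"}}, fail_times=1, backoff_s=0.0)
print(crm.get("c-1"))  # {'tier': 'gold'}
```

A second adapter, for an ERP for example, would reuse the same retry and latency behavior without copying it. That reuse is what the red flag above is probing for.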

Security and Compliance Architecture

- Covers: Embedding security into the application layer. This means data encryption, strict access controls, and formal compliance certifications, not just relying on cloud provider promises.

- What to ask: “Beyond hosting, what application-level security measures protect conversation data?”

- Red flag: A vendor who states, “We use AWS, so it’s secure.” That is a fundamental misunderstanding of shared responsibility.
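One application-level measure a vendor might name is redacting personal data before transcripts reach logs or analytics. The sketch below is a minimal regex-based redactor. The patterns are illustrative assumptions that will miss many real formats. Redaction complements encryption at rest and access controls; it does not replace them.

```python
import re

# Illustrative patterns only (assumptions); production systems need broader,
# locale-aware PII detection. Order matters: card numbers are replaced before
# the looser phone pattern can claim them.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace detected PII with a type label before the transcript is stored."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("I'm jane.doe@example.com, card 4111 1111 1111 1111, call +1 (555) 010-2368"))
# I'm [EMAIL], card [CARD], call [PHONE]
```

Replacing values with type labels rather than deleting them keeps transcripts useful for intent analysis while keeping raw identifiers out of downstream systems.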

Observability and Monitoring Infrastructure

- Covers: Having clear visibility into how the chatbot performs daily. It involves tracking accuracy, user sentiment, speed, and operational costs on a dedicated dashboard.

- What to ask: “What key performance indicators do you monitor on day one post-launch?”

- Red flag: Analytics are treated as a phase-two deliverable. This usually means the vendor has not operated a chatbot at scale.

Checklist Summary Box

A sophisticated stack is built for endurance. If a potential partner struggles to explain their LLM routing logic or their approach to model monitoring, they are likely constructing a prototype, not a production system. Their technical choices ultimately determine your solution’s longevity and reliability within structured AI chatbot development services.

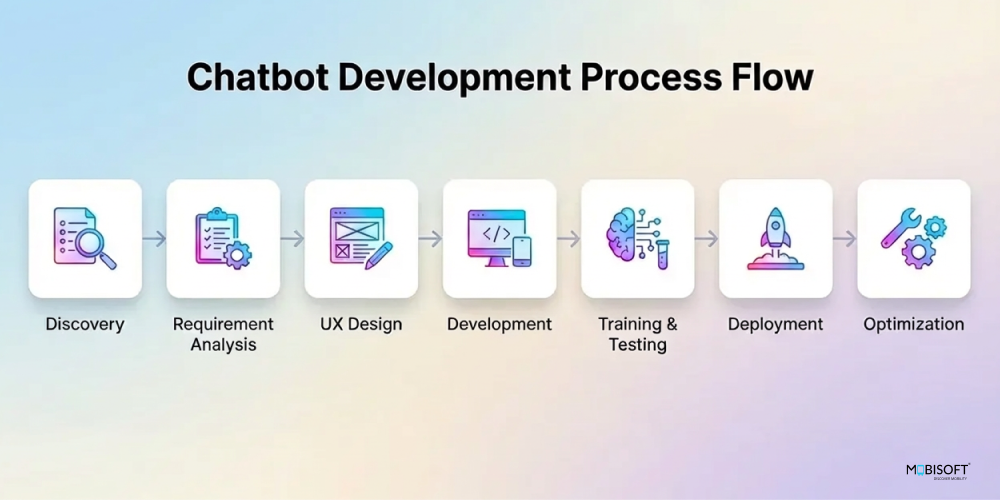

Checklist Section 3: Delivery Process Audit

The final and perhaps most telling audit examines operational discipline within an AI Chatbot Development Company. Brilliant teams can be hamstrung by chaotic processes. This evaluation focuses on the procedural backbone that ensures consistent, high-quality delivery and long-term viability. A mature system here is often the clearest indicator of true enterprise readiness in structured AI chatbot development services.

Structured Discovery Phase (Minimum 2 Weeks)

- The Real Test: This phase should challenge your assumptions. A proficient partner uses discovery to pressure-test your initial use cases with real data, often proposing a more focused, valuable starting point. It’s a strategic alignment exercise critical to successful enterprise chatbot development.

- What to ask: “Based on our conversation logs, which use cases would you advise against automating first, and why?”

- Red flag: The discovery deliverable is a verbatim restatement of your initial RFP. It shows that no analytical value was added.

Conversation Design-First Workflow

- The Real Test: This is about separating architecture from implementation. The conversation design document should be a comprehensive contract that engineers execute against.

- What to ask: “What is your change control process if we need to alter a finalized dialogue flow during development?”

- Red flag: The conversation designer is also the front-end developer. This creates an inherent conflict where technical ease can trump user experience.

Intent Accuracy Benchmarking in Every Sprint

- The Real Test: It’s not just about hitting a number like 92%; it’s about the methodology. How is the test dataset curated? Do they measure precision and recall? The process for improving a low score is more important than the score itself.

- What to ask: “If intent accuracy drops in a sprint, what is your structured, three-step investigative process to diagnose the cause?”

- Red flag: Benchmarks are run against the same static test set every time, leading to overfitting and a false sense of security.
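Measuring precision and recall per intent, rather than one headline accuracy number, shows where a model is confusing intents. The sketch below assumes test results arrive as `(expected_intent, predicted_intent)` pairs. A fresh, periodically refreshed test set is what guards against the overfitting described in the red flag. The metric code cannot substitute for that curation.

```python
from collections import Counter
from typing import Dict, List, Tuple


def intent_metrics(pairs: List[Tuple[str, str]]) -> Dict:
    """Overall accuracy plus per-intent precision and recall from (expected, predicted) pairs."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for expected, predicted in pairs:
        if expected == predicted:
            tp[expected] += 1
        else:
            fp[predicted] += 1  # the predicted intent claimed a sample that was not its own
            fn[expected] += 1   # the expected intent missed one of its samples
    per_intent = {}
    for intent in sorted(set(tp) | set(fp) | set(fn)):
        claimed = tp[intent] + fp[intent]
        actual = tp[intent] + fn[intent]
        per_intent[intent] = {
            "precision": tp[intent] / claimed if claimed else 0.0,
            "recall": tp[intent] / actual if actual else 0.0,
        }
    accuracy = sum(tp.values()) / len(pairs) if pairs else 0.0
    return {"accuracy": accuracy, "per_intent": per_intent}


pairs = [
    ("billing", "billing"), ("billing", "refund"),
    ("refund", "refund"), ("refund", "refund"),
    ("shipping", "billing"),
]
report = intent_metrics(pairs)
print(report["accuracy"])  # 0.6
print(report["per_intent"]["refund"])
```

Here `refund` has perfect recall but lower precision: the model over-predicts it and pulls in billing questions. A diagnosis like this is where a sprint investigation should start.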

Human-in-the-Loop Escalation Design

- The Real Test: A sophisticated design doesn’t just hand off; it provides a summary. The system should condense the interaction context for the human agent, diagnosing where the automation failed. This turns a handoff into a learning opportunity.

- What to ask: “What summary of the failed interaction does your system provide to the human agent who takes over?”

- Red flag: Escalation is treated as a simple “transfer to queue” function. The human agent receives no context, frustrating both the user and the agent.
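A context-rich handoff is mostly a data-shaping problem. The sketch below condenses a conversation into a summary for the human agent. The `Turn` fields, the 0.5 low-confidence cutoff, and the note format are hypothetical choices. A production system would typically also attach the full transcript and any retrieved sources.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

LOW_CONFIDENCE = 0.5  # assumed cutoff for flagging a turn the bot was unsure about


@dataclass
class Turn:
    speaker: str                  # "user" or "bot"
    text: str
    intent: Optional[str] = None  # set on user turns by the NLU layer
    confidence: float = 0.0


@dataclass
class HandoffSummary:
    last_intent: Optional[str]
    failure_reason: str
    user_attempts: int
    low_confidence_turns: int
    last_user_message: str
    entities: Dict[str, str] = field(default_factory=dict)

    def to_agent_note(self) -> str:
        facts = ", ".join(f"{k}={v}" for k, v in self.entities.items()) or "none"
        return (
            f"Escalated: {self.failure_reason}. Likely intent: {self.last_intent or 'unknown'}. "
            f"User messages: {self.user_attempts} ({self.low_confidence_turns} low-confidence). "
            f"Known details: {facts}. Last message: \"{self.last_user_message}\""
        )


def build_handoff(turns: List[Turn], entities: Dict[str, str], failure_reason: str) -> HandoffSummary:
    """Condense the conversation so the agent does not have to re-ask the user."""
    user_turns = [t for t in turns if t.speaker == "user"]
    intents = [t.intent for t in user_turns if t.intent]
    return HandoffSummary(
        last_intent=intents[-1] if intents else None,
        failure_reason=failure_reason,
        user_attempts=len(user_turns),
        low_confidence_turns=sum(1 for t in user_turns if t.confidence < LOW_CONFIDENCE),
        last_user_message=user_turns[-1].text if user_turns else "",
        entities=dict(entities),
    )


turns = [
    Turn("user", "I was charged twice", "billing_dispute", 0.62),
    Turn("bot", "Can you share the order number?"),
    Turn("user", "It's 4471 but the refund never came", "billing_dispute", 0.41),
]
summary = build_handoff(turns, {"order_id": "4471"}, "confidence below threshold after a rephrase")
print(summary.to_agent_note())
```

The `failure_reason` and the low-confidence count record where the automation failed. Those fields are what turn each handoff into training signal.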

UAT with Real End-Users

- The Real Test: The goal is to measure cognitive load, not just correctness. How long does a user hesitate? How many rephrases do they attempt? Quantitative metrics from these sessions are what inform final refinements in serious custom chatbot development services engagements.

- What to ask: “Beyond task completion, what behavioral metrics do you capture during user testing sessions?”

- Red flag: The feedback mechanism is purely qualitative, like a thumbs-up/down button, with no structured analysis of why.
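Hesitation and rephrasing can be derived from timestamped session logs. This sketch assumes a hypothetical event format. Each message carries a timestamp in seconds, and the bot flags its own fallback replies (“Sorry, I didn’t understand”). A user message right after a fallback is counted as a rephrase. Real UAT tooling would also segment results by task and by user.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Event:
    t: float                # seconds since the session started
    speaker: str            # "user" or "bot"
    fallback: bool = False  # True when the bot replied that it did not understand


def session_metrics(events: List[Event]) -> Dict[str, float]:
    """Hesitation (bot reply -> next user message) and rephrase counts for one UAT session."""
    hesitations: List[float] = []
    rephrases = 0
    for prev, cur in zip(events, events[1:]):
        if prev.speaker == "bot" and cur.speaker == "user":
            hesitations.append(cur.t - prev.t)
            if prev.fallback:
                rephrases += 1  # the user had to try again after a fallback
    return {
        "mean_hesitation_s": mean(hesitations) if hesitations else 0.0,
        "max_hesitation_s": max(hesitations, default=0.0),
        "rephrases": rephrases,
    }


session = [
    Event(0.0, "bot"), Event(4.0, "user"),
    Event(5.0, "bot", fallback=True), Event(15.0, "user"),
    Event(16.0, "bot"), Event(18.0, "user"),
]
print(session_metrics(session))
```

The ten-second pause after the fallback is the kind of signal a thumbs-up/down button never captures.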

Phased Rollout Protocol

- The Real Test: Each phase should have a distinct, non-functional goal. The pilot might test infrastructure stability; the beta might measure user adoption behavior. In mature AI automation solutions, the criteria to proceed differ at each stage.

- What to ask: “What is the single primary learning objective for our limited beta group that differs from the internal pilot?”

- Red flag: The rollout plan is purely calendar-driven (e.g., “two weeks per phase”) rather than metric-driven.
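A metric-driven rollout gives each phase its own exit criteria, and those criteria are checked rather than scheduled. The phase names, metric names, and thresholds below are hypothetical examples that echo the pilot and beta goals described above. The key design choice is that an unmeasured metric counts as a failure, not a pass.

```python
from typing import Dict, List, Tuple

# Hypothetical exit criteria: each phase is gated on different metrics, not on elapsed time.
PHASE_GATES: Dict[str, Dict[str, Tuple[str, float]]] = {
    "internal_pilot": {"uptime": (">=", 0.995), "p95_latency_ms": ("<=", 1500)},
    "limited_beta": {"containment_rate": (">=", 0.60), "csat": (">=", 4.0)},
    "staged_expansion": {"intent_accuracy": (">=", 0.90), "escalation_rate": ("<=", 0.25)},
}


def gate_failures(phase: str, observed: Dict[str, float]) -> List[str]:
    """List unmet exit criteria for `phase`; an empty list means the rollout may advance."""
    failures = []
    for metric, (op, threshold) in PHASE_GATES[phase].items():
        value = observed.get(metric)
        if value is None:
            failures.append(f"{metric}: not measured")  # missing data blocks promotion
        elif op == ">=" and value < threshold:
            failures.append(f"{metric}: {value} is below {threshold}")
        elif op == "<=" and value > threshold:
            failures.append(f"{metric}: {value} is above {threshold}")
    return failures


print(gate_failures("limited_beta", {"containment_rate": 0.55}))
# ['containment_rate: 0.55 is below 0.6', 'csat: not measured']
```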

Post-Launch Support SLA

- The Real Test: The SLA should distinguish between reactive support and proactive evolution. A mature partner commits to scheduled model health audits and presents performance trend analyses, treating the contract as a foundation for continuous improvement.

- What to ask: “What specific insights will your quarterly business review include, beyond uptime statistics?”

- Red flag: The post-launch plan is exclusively focused on break-fix support, with no mention of iterative model refinement or strategic reviews.

Checklist Summary Box

A deliberate process converts vision into value systematically. It is the antidote to unpredictability. When a company can articulate not just what it does but why each step exists and how each gate protects your investment, you are no longer buying a project. You are engaging a capable AI Chatbot Development Company as a partner for the full lifecycle of your solution.

What Strong Chatbot Partners Reveal Early

You can learn a great deal about a potential AI Chatbot Development Company from the questions it asks and the details it volunteers before any agreement is signed. One strong indicator is a focus on your data readiness. A proficient company will want to examine your existing conversation logs and knowledge base structure early on. This shows it grasps a fundamental truth: the chatbot’s intelligence is built upon your information.

When evaluating past work in structured AI chatbot development services, look for case studies that go beyond client praise. The most convincing evidence includes specific performance data, such as achieved intent accuracy rates or the percentage decrease in escalation volumes. These metrics demonstrate measurable results.

Also, value a partner that offers a perspective on technology selection, particularly in LLM-powered chatbot development. They should advise you on the suitability of different LLMs for your specific tasks, balancing cost and capability. This guidance is a mark of technical confidence.

Finally, clarity on project completion is essential. The proposal should define clear acceptance criteria, such as target performance benchmarks and support protocols, so both parties share the same definition of success from the outset. This is a standard expectation in mature enterprise chatbot development engagements.

Partnering with a full-scale AI Development Company helps ensure your chatbot can evolve into intelligent AI agents with automation and predictive capabilities.

Turning Vendor Evaluation Into Due Diligence

This audit framework does more than filter vendors. It redefines the selection conversation, moving from generalized promises to specific, evidence-based evaluation of an AI Chatbot Development Company. In 2026, the leading enterprises have already adapted to this model of due diligence.

The checklist is your tool for deeper inquiry. Its true purpose is to initiate a dialogue that reveals a partner’s operational depth and strategic thinking across AI automation solutions. The most capable companies will not shy away from these questions. They will engage with them thoroughly, often adding their own critical layers to your audit.

This level of scrutiny is now the baseline for mitigating risk and ensuring alignment. It is what separates a transactional project from a strategic partnership built to evolve through structured AI agent development services. Let this structure guide your discussions, and let the quality of the answers you receive guide your final, confident decision.

Key Takeaways

- Chatbot failures usually trace back to weak vendor selection, not model capability or launch execution in AI chatbot development services.

- Evaluating a chatbot company requires auditing roles, accountability, and operational controls, not demos or slide decks.

- Dedicated ownership across conversation design, NLP, integration, QA, and AI operations determines long-term reliability.

- Production-ready stacks prioritize model routing, retrieval discipline, monitoring, and cost control over novelty in LLM-powered chatbot development.

- Delivery maturity shows up in discovery rigor, benchmarking discipline, and metric-driven rollout decisions.

- Post-launch governance is essential because chatbot intelligence degrades without continuous oversight.

- Vendors unable to explain their technical tradeoffs clearly should not be considered.

Frequently Asked Questions

When should technical scrutiny begin in the vendor selection process?

Ideally, before pricing enters the discussion. Early technical scrutiny exposes integration limits, data dependencies, and operational assumptions that sales conversations skip. When these gaps surface late, teams either accept hidden risk or restart evaluation under pressure.

How can buyers tell if a chatbot partner plans for intelligence decay?

Ask how performance changes are tracked over months, not weeks. Mature partners expect accuracy drift, rising edge cases, and evolving user language. They plan monitoring, retraining, and review cycles upfront instead of treating decline as unexpected failure.

Does industry experience matter more than system complexity exposure?

Industry familiarity helps with terminology, but complexity exposure matters more. Vendors who have navigated tangled CRMs, identity layers, and data latency understand failure patterns. That experience carries across industries far better than surface-level domain knowledge.

What determines whether chatbot costs stay predictable after launch?

Cost stability depends on model routing decisions, escalation logic, and how knowledge retrieval is designed. Vendors who explain cost behavior under traffic spikes and expanded usage show foresight. Flat estimates without usage scenarios usually break down in production.
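Once routing shares are explicit, cost behavior under different usage scenarios can be estimated with simple arithmetic. The function below is a back-of-envelope sketch. Token counts, routing shares, and per-1,000-token prices are all placeholder assumptions. Real bills also include embedding, retrieval, and infrastructure costs.

```python
from typing import Dict


def monthly_llm_cost(
    conversations: int,
    turns_per_conversation: float,
    tokens_per_turn: float,
    routing_mix: Dict[str, float],        # model tier -> share of turns (must sum to 1)
    usd_per_1k_tokens: Dict[str, float],  # model tier -> placeholder price
) -> float:
    """Estimate monthly LLM spend in USD for one usage scenario."""
    if abs(sum(routing_mix.values()) - 1.0) > 1e-9:
        raise ValueError("routing shares must sum to 1")
    total_tokens = conversations * turns_per_conversation * tokens_per_turn
    return sum(total_tokens * share * usd_per_1k_tokens[tier] / 1000 for tier, share in routing_mix.items())


prices = {"small": 0.0005, "large": 0.01}
for label, volume in [("baseline", 10_000), ("3x traffic spike", 30_000)]:
    routed = monthly_llm_cost(volume, 4, 1_000, {"small": 0.8, "large": 0.2}, prices)
    large_only = monthly_llm_cost(volume, 4, 1_000, {"large": 1.0}, prices)
    print(f"{label}: routed ${routed:,.2f} vs large-only ${large_only:,.2f}")
```

Under these placeholder prices, the routed mix costs about a quarter of a large-only setup at every volume. A vendor should be able to produce this kind of scenario table for your traffic.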

Why does chatbot support differ from traditional application support?

Chatbots respond to human behavior, not static inputs. As users adapt, phrasing changes and edge cases multiply. Ongoing oversight is required to protect accuracy and trust. Treating support as break-fix only ignores how language systems evolve daily.

Can a lean vendor team still deliver enterprise-grade chatbots?

Yes, if responsibilities are clearly separated and owned. Small teams with dedicated roles often outperform larger groups with blurred accountability. Enterprises should examine who owns conversation quality, integration reliability, and ongoing performance before assuming scale equals readiness.